
MERJ and Vercel's research to demystify Google's rendering through empirical evidence.

Understanding how search engines crawl, render, and index web pages is crucial for search engine optimization. As search engines like Google have changed their processes over the years, it has become hard to keep track of what works and what doesn’t, especially with client-side JavaScript.

We’ve noticed that a number of old beliefs have stuck around and kept the community unsure about best practices for application SEO:

“Google can’t render client-side JavaScript.”

“Google treats JavaScript pages differently.”

“Rendering queue and timing significantly impact SEO.”

“JavaScript-heavy sites have slower page discovery.”

To address these beliefs, we’ve partnered with MERJ, a leading SEO & data engineering consultancy, to conduct new experiments on Google’s crawling behavior. We analyzed over 100,000 Googlebot fetches across various sites to test and validate Google’s SEO capabilities.

Let's look at how Google's rendering has evolved. Then, we'll explore our findings and their real-world impact on modern web apps.


The evolution of Google’s rendering capabilities

Over the years, Google’s ability to crawl and index web content has changed significantly. Understanding this evolution is important for making sense of the current state of SEO for modern web applications.

Pre-2009: Limited JavaScript support

In the early days of search, Google primarily indexed static HTML content. JavaScript-generated content was largely invisible to search engines, leading to the widespread use of static HTML for SEO purposes.

2009–2015: AJAX crawling scheme

Google introduced the AJAX crawling scheme, allowing websites to provide HTML snapshots of dynamically generated content. This was a stopgap solution that required developers to create separate, crawlable versions of their pages.

2015–2018: Early JavaScript rendering

Google began rendering pages using a headless Chrome browser, marking a significant step forward. However, the renderer was based on an older version of Chrome and still had limitations in processing modern JS features.

2018–present: Modern rendering capabilities

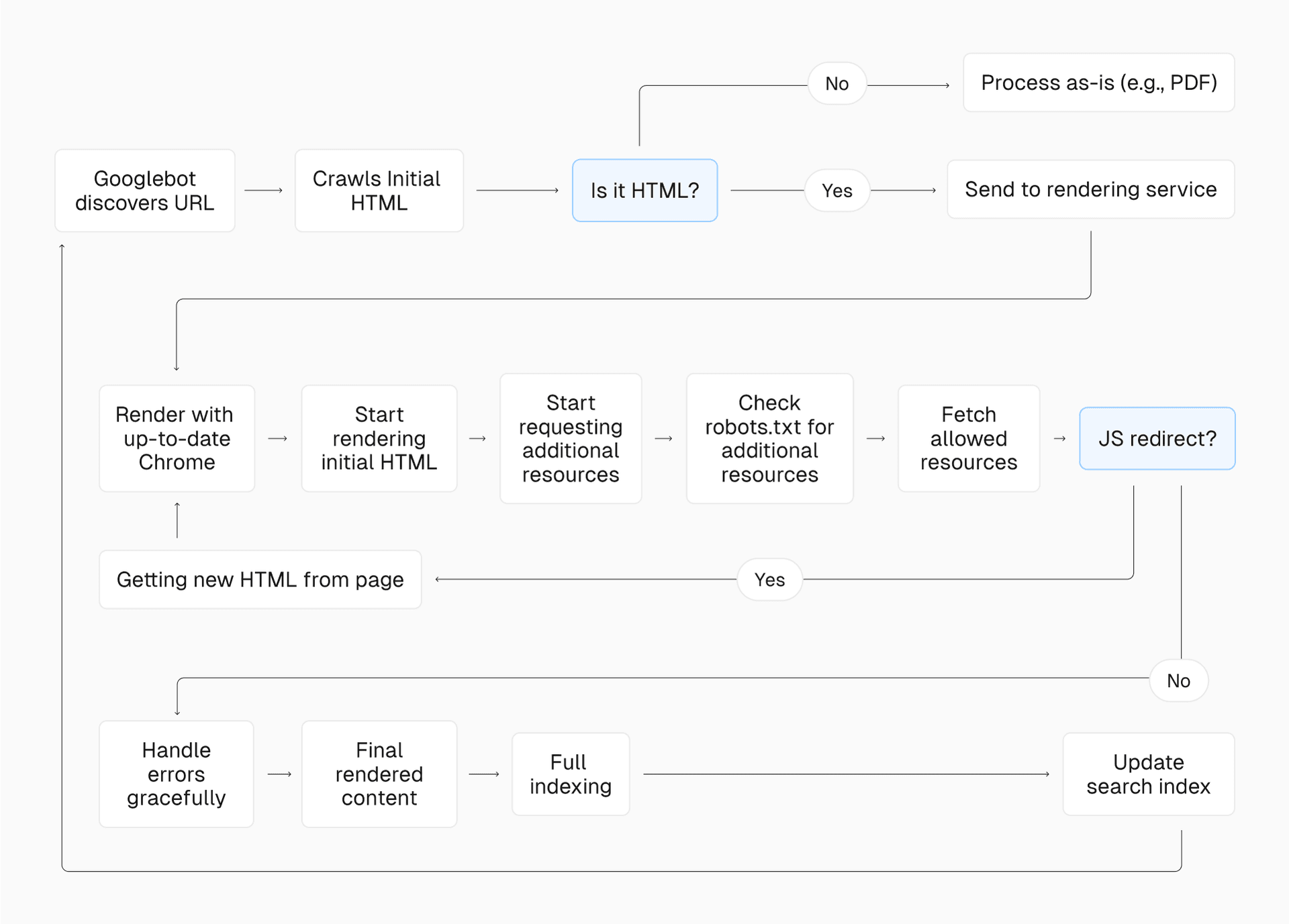

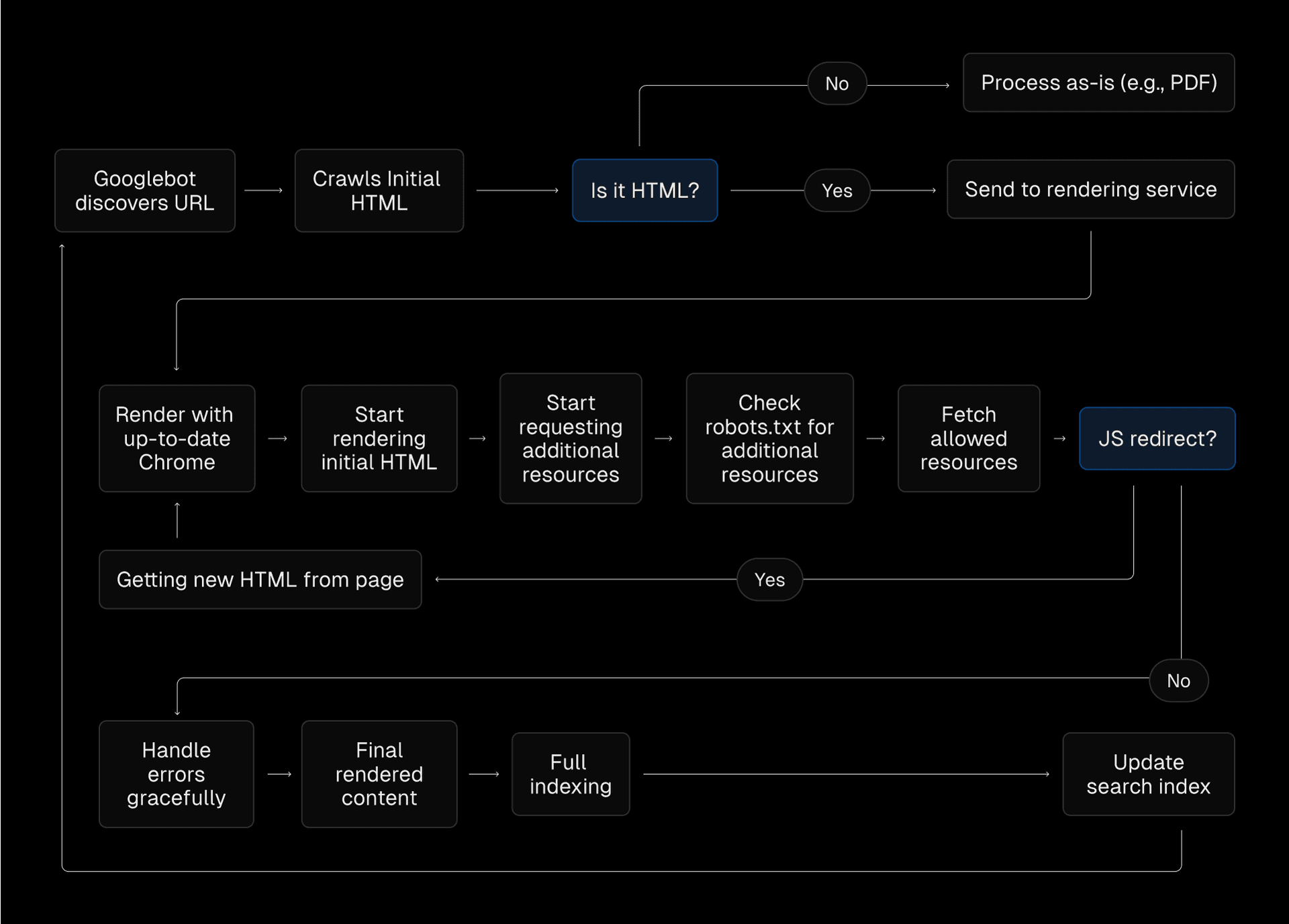

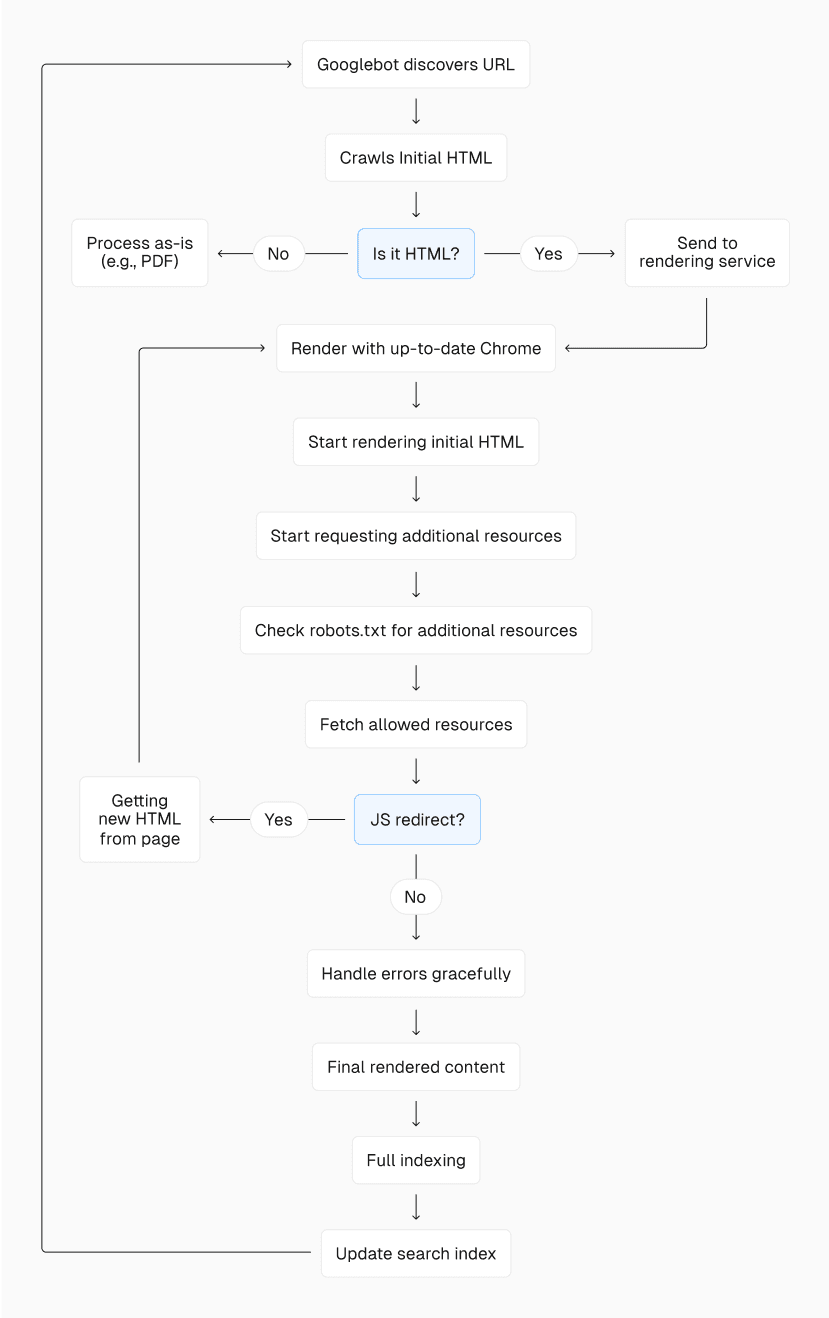

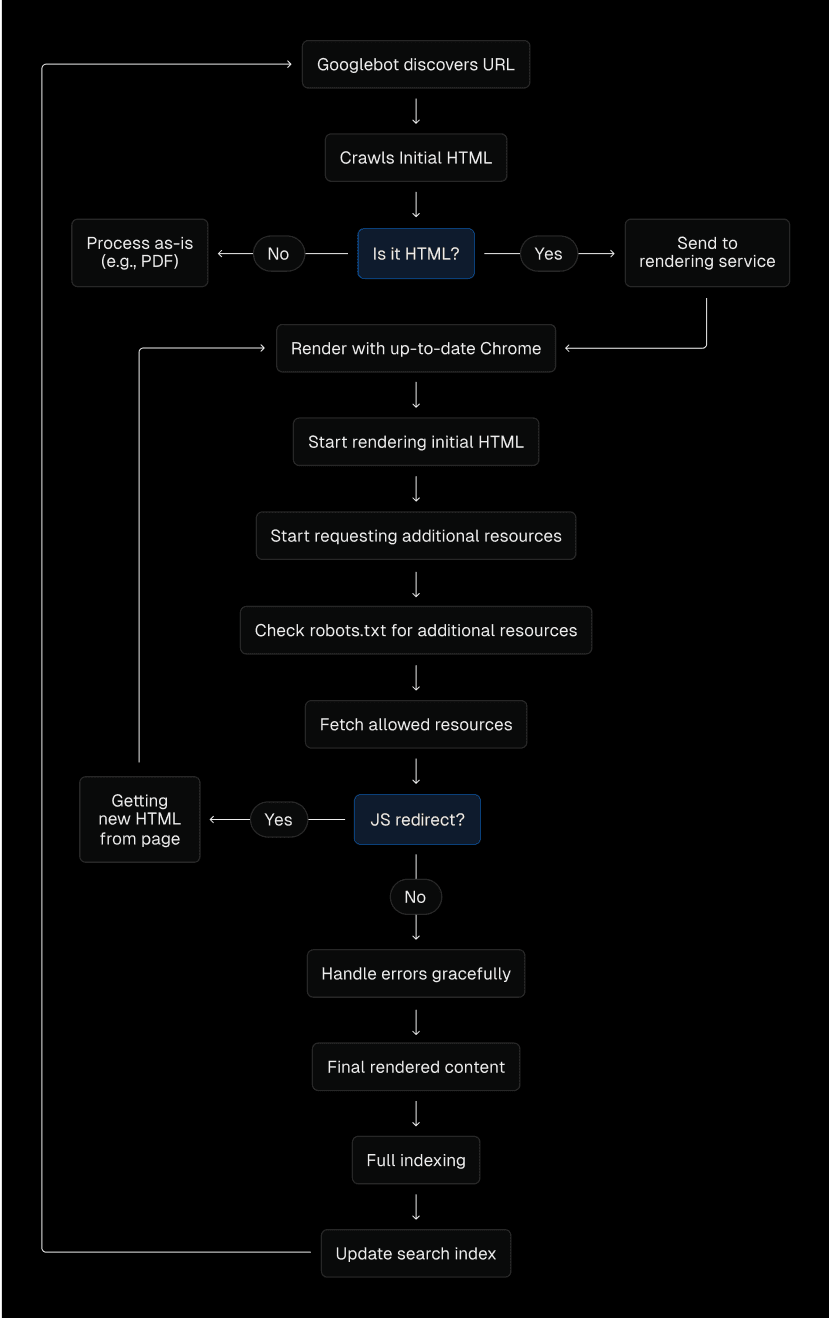

Today, Google uses an up-to-date version of Chrome for rendering, keeping pace with the latest web technologies. Key aspects of the current system include:

Universal rendering: Google now attempts to render all HTML pages, not just a subset.

Up-to-date browser: Googlebot uses the latest stable version of Chrome/Chromium, supporting modern JS features.

Stateless rendering: Each page render occurs in a fresh browser session, without retaining cookies or state from previous renders. Google will generally not click on items on the page, such as tabbed content or cookie banners.

Cloaking: Google prohibits showing different content to users and search engines to manipulate rankings. Avoid code that alters content based on User-Agent. Instead, optimize your app's stateless rendering for Google, and implement personalization through stateful methods.

Asset caching: Google speeds up webpage rendering by caching assets, which is useful for pages sharing resources and for repeated renderings of the same page. Instead of using HTTP Cache-Control headers, Google's Web Rendering Service employs its own internal heuristics to determine when cached assets are still fresh and when they need to be downloaded again.
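Because every render happens in a fresh session, Googlebot always arrives without cookies. A minimal sketch of stateful personalization, assuming a hypothetical `preferred-topic` cookie and hypothetical hero variants: the choice is keyed off stored state only, never off the User-Agent, so Googlebot and first-time visitors get identical default content.

```typescript
// Hypothetical content variants; the names are illustrative only.
type HeroVariant = "default" | "ai" | "commerce";

const PERSONALIZED_VARIANTS: ReadonlySet<string> = new Set(["ai", "commerce"]);

// Parse a raw Cookie header ("a=1; b=2") into a name/value map.
function parseCookies(header: string | undefined): Map<string, string> {
  const cookies = new Map<string, string>();
  if (!header) return cookies;
  for (const part of header.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    const name = part.slice(0, eq).trim();
    if (name) cookies.set(name, part.slice(eq + 1).trim());
  }
  return cookies;
}

// Pick a variant from stored state only. The User-Agent is deliberately not an
// input, so Googlebot (which renders without cookies) and first-time visitors
// receive exactly the same default content.
function chooseHero(cookieHeader: string | undefined): HeroVariant {
  const topic = parseCookies(cookieHeader).get("preferred-topic"); // hypothetical cookie
  return topic !== undefined && PERSONALIZED_VARIANTS.has(topic)
    ? (topic as HeroVariant)
    : "default";
}
```

Returning users who have set the cookie see a tailored hero; everyone else, crawlers included, sees the same page, which keeps the output consistent with stateless rendering.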

With a better understanding of what Google is capable of, let's look at some common myths and how they impact SEO.

Methodology

To investigate the following myths, we conducted a study using Vercel’s infrastructure and MERJ’s Web Rendering Monitor (WRM) technology. Our research focused on nextjs.org, with supplemental data from monogram.io and basement.io, spanning from April 1 to April 30, 2024.

Data collection

We placed a custom Edge Middleware on these sites to intercept and analyze requests from search engine bots. This middleware allowed us to:

Identify and track requests from various search engines and AI crawlers. (No user data was included in this query.)

Inject a lightweight JavaScript library in HTML responses for bots.

The JavaScript library, triggered when a page finished rendering, sent data back to a long-running server, including:

The page URL.

The unique request identifier (to match the page rendering against regular server access logs).

The timestamp of the rendering completion (calculated from the time the server received the JavaScript library's request).
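The two middleware duties described above can be sketched as framework-agnostic helpers. This is not the study's code: the crawler patterns, the use of the `load` event as a stand-in for "finished rendering", and the beacon endpoint are all assumptions, and wiring the helpers into a specific middleware runtime is left out.

```typescript
// Hypothetical user-agent patterns for the crawlers being tracked.
const TRACKED_BOTS: readonly RegExp[] = [/Googlebot/i, /bingbot/i, /GPTBot/i, /ClaudeBot/i];

// Identify crawler requests by User-Agent. UA strings can be spoofed; this sketch
// does not verify the requester's IP address.
function isTrackedBot(userAgent: string | null): boolean {
  if (userAgent === null) return false;
  const ua = userAgent;
  return TRACKED_BOTS.some((re) => re.test(ua));
}

// Inject a small script before </body> that reports the request ID and URL once the
// page's load event fires (an assumed stand-in for "finished rendering"). No client
// timestamp is sent: completion time is recorded when the beacon server receives it.
function injectBeacon(html: string, requestId: string, beaconUrl: string): string {
  const script =
    "<script>window.addEventListener(\"load\",function(){" +
    `navigator.sendBeacon(${JSON.stringify(beaconUrl)},` +
    `JSON.stringify({id:${JSON.stringify(requestId)},url:location.href}));});</script>`;
  const idx = html.lastIndexOf("</body>");
  return idx === -1 ? html + script : html.slice(0, idx) + script + html.slice(idx);
}
```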

Data analysis

By comparing the initial request present in server access logs with the data sent from our middleware to an external beacon server, we could:

Confirm which pages were successfully rendered by search engines.

Calculate the time difference between the initial crawl and the completed render.

Analyze patterns in how different types of content and URLs were processed.
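The comparison can be sketched as a join on the request identifier. The record shapes and field names below are hypothetical; timestamps are assumed to be milliseconds since the epoch, with `receivedAt` being the beacon server's reception time.

```typescript
// Hypothetical record shapes; field names are illustrative.
interface AccessLogEntry { requestId: string; url: string; crawledAt: number } // ms since epoch
interface BeaconEntry { requestId: string; receivedAt: number } // ms since epoch, server receive time

interface RenderAnalysis {
  renderedRate: number; // fraction of crawled pages with a matching beacon
  delaysMs: number[];   // crawl-to-render delay for each matched pair
  unrendered: string[]; // URLs crawled but never reported as rendered
}

function analyzeRenders(logs: AccessLogEntry[], beacons: BeaconEntry[]): RenderAnalysis {
  // Keep the earliest beacon per request ID; a page may report more than once.
  const firstBeacon = new Map<string, number>();
  for (const b of beacons) {
    const prev = firstBeacon.get(b.requestId);
    if (prev === undefined || b.receivedAt < prev) firstBeacon.set(b.requestId, b.receivedAt);
  }
  const delaysMs: number[] = [];
  const unrendered: string[] = [];
  for (const entry of logs) {
    const renderedAt = firstBeacon.get(entry.requestId);
    if (renderedAt === undefined) unrendered.push(entry.url);
    else delaysMs.push(renderedAt - entry.crawledAt);
  }
  return {
    renderedRate: logs.length === 0 ? 0 : delaysMs.length / logs.length,
    delaysMs,
    unrendered,
  };
}
```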

Data scope

For this article, we primarily focused on data from Googlebot, which provided the largest and most reliable dataset. Our analysis included over 37,000 rendered HTML pages matched with server-beacon pairs, giving us a robust sample from which to draw conclusions.

We are still gathering data about other search engines, including AI providers like OpenAI and Anthropic, and hope to talk more about our findings in the future.

In the following sections, we’ll dive into each myth, providing more relevant methodology as necessary.

Myth 1: “Google can’t render JavaScript content”

This myth has led many developers to avoid JS frameworks or resort to complex workarounds for SEO.

The test

To test Google’s ability to render JavaScript content, we focused on four key aspects:

JS framework compatibility: We analyzed Googlebot's interactions with Next.js using data from nextjs.org, which uses a mix of static prerendering, server-side rendering, and client-side rendering.

Dynamic content indexing: We examined pages on nextjs.org that load content asynchronously via API calls. This allowed us to determine if Googlebot could process and index content not present in the initial HTML response.

Streamed content via React Server Components (RSCs): Similar to the above, much of nextjs.org is built with the Next.js App Router and RSCs. We could see how Googlebot processed and indexed content incrementally streamed to the page.

Rendering success rate: We compared the number of Googlebot requests in our server logs to the number of successful rendering beacons received. This gave us insight into what percentage of crawled pages were fully rendered.

Our findings

Out of over 100,000 Googlebot fetches analyzed on nextjs.org, excluding status code errors and non-indexable pages, 100% of HTML pages resulted in full-page renders, including pages with complex JS interactions.

All content loaded asynchronously via API calls was successfully indexed, demonstrating Googlebot's ability to process dynamically loaded content.

Next.js, a React-based framework, was fully rendered by Googlebot, confirming compatibility with modern JavaScript frameworks.

Streamed content via RSCs was also fully rendered, confirming that streaming does not adversely impact SEO.

Google attempts to render virtually all HTML pages it crawls, not just a subset of JavaScript-heavy pages.

Myth 2: “Google treats JavaScript pages differently”

A common misconception is that Google has a separate process or criteria for JavaScript-heavy pages. Our research, combined with official statements from Google, debunks this myth.

The test

To test whether Google treats JS-heavy pages differently, we took several targeted approaches:

CSS @import test: We created a test page without JavaScript, but with a CSS file that @imports a second CSS file (which would only be downloaded and present in server logs upon rendering the first CSS file). By comparing this behavior to JS-enabled pages, we could verify whether Google’s renderer processes CSS any differently with and without JS enabled.

Status code and meta tag handling: We developed a Next.js application with middleware to test various HTTP status codes with Google. Our analysis focused on how Google processes pages with different status codes (200, 304, 3xx, 4xx, 5xx) and those with noindex meta tags. This helped us understand if JavaScript-heavy pages are treated differently in these scenarios.

JavaScript complexity analysis: We compared Google's rendering behavior across pages with varying levels of JS complexity on nextjs.org. This included pages with minimal JS, those with moderate interactivity, and highly dynamic pages with extensive client-side rendering. We also calculated and compared the time between the initial crawl and the completed render to see if more complex JS led to longer rendering queues or processing times.

Our findings

Our CSS @import test confirmed that Google successfully renders pages with or without JS.

Google renders all 200 status HTML pages, regardless of JS content. Pages with 304 status are rendered using the content of the original 200 status page. Pages with other 3xx, 4xx, and 5xx status codes were not rendered.

Pages with noindex meta tags in the initial HTML response were not rendered, regardless of JS content. Client-side removal of noindex tags is not effective for SEO purposes; if a page contains the noindex tag in the initial HTML response, it won't be rendered, and the JavaScript that removes the tag won't be executed.

We found no significant difference in Google’s success rate in rendering pages with varying levels of JS complexity. At nextjs.org's scale, we also found no correlation between JavaScript complexity and rendering delay. However, more complex JS on a much larger site can impact crawl efficiency.
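A developer-side check that follows from these findings is to inspect the HTML your server actually sends, since a noindex directive there stops rendering before any JavaScript runs. `hasInitialNoindex` is a hypothetical helper, not a Google API, and `expectRender` merely encodes this study's status-code observations; it is not Google's implementation. The sketch recognizes the `robots` and `googlebot` meta names and the `noindex` and `none` directives, and ignores other signals such as HTTP headers.

```typescript
// Hypothetical helper: does the server-sent HTML already carry a robots noindex?
function hasInitialNoindex(initialHtml: string): boolean {
  const metaTags = initialHtml.match(/<meta\b[^>]*>/gi) ?? [];
  return metaTags.some((tag) => {
    const name = /\sname\s*=\s*["']?([^"'\s>]+)/i.exec(tag)?.[1]?.toLowerCase();
    const content = /\scontent\s*=\s*["']([^"']*)["']/i.exec(tag)?.[1]?.toLowerCase() ?? "";
    if (name !== "robots" && name !== "googlebot") return false;
    // "none" is shorthand for "noindex, nofollow".
    return content.split(",").some((d) => d.trim() === "noindex" || d.trim() === "none");
  });
}

// Encodes this study's observations only; it is not Google's implementation.
// For a 304, pass the body of the previously fetched 200 response, since that
// is the content Google rendered.
function expectRender(status: number, initialHtml: string): boolean {
  if (status !== 200 && status !== 304) return false; // other 3xx, 4xx, 5xx: not rendered
  return !hasInitialNoindex(initialHtml);
}
```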

Myth 3: “Rendering queue and timing significantly impact SEO”

Many SEO practitioners believe that JavaScript-heavy pages face significant delays in indexing due to a rendering queue. Our research provides a clearer view of this process.

The test

To address the impact of rendering queue and timing on SEO, we investigated:

Rendering delays: We examined the time difference between Google's initial crawl of a page and its completion of rendering, using data from over 37,000 matched server-beacon pairs on nextjs.org.

URL types: We analyzed rendering times for URLs with and without query strings, as well as for different sections of nextjs.org (e.g., /docs, /learn, /showcase).

Frequency patterns: We looked at how often Google re-renders pages and if there were patterns in rendering frequency for different types of content.

Our findings

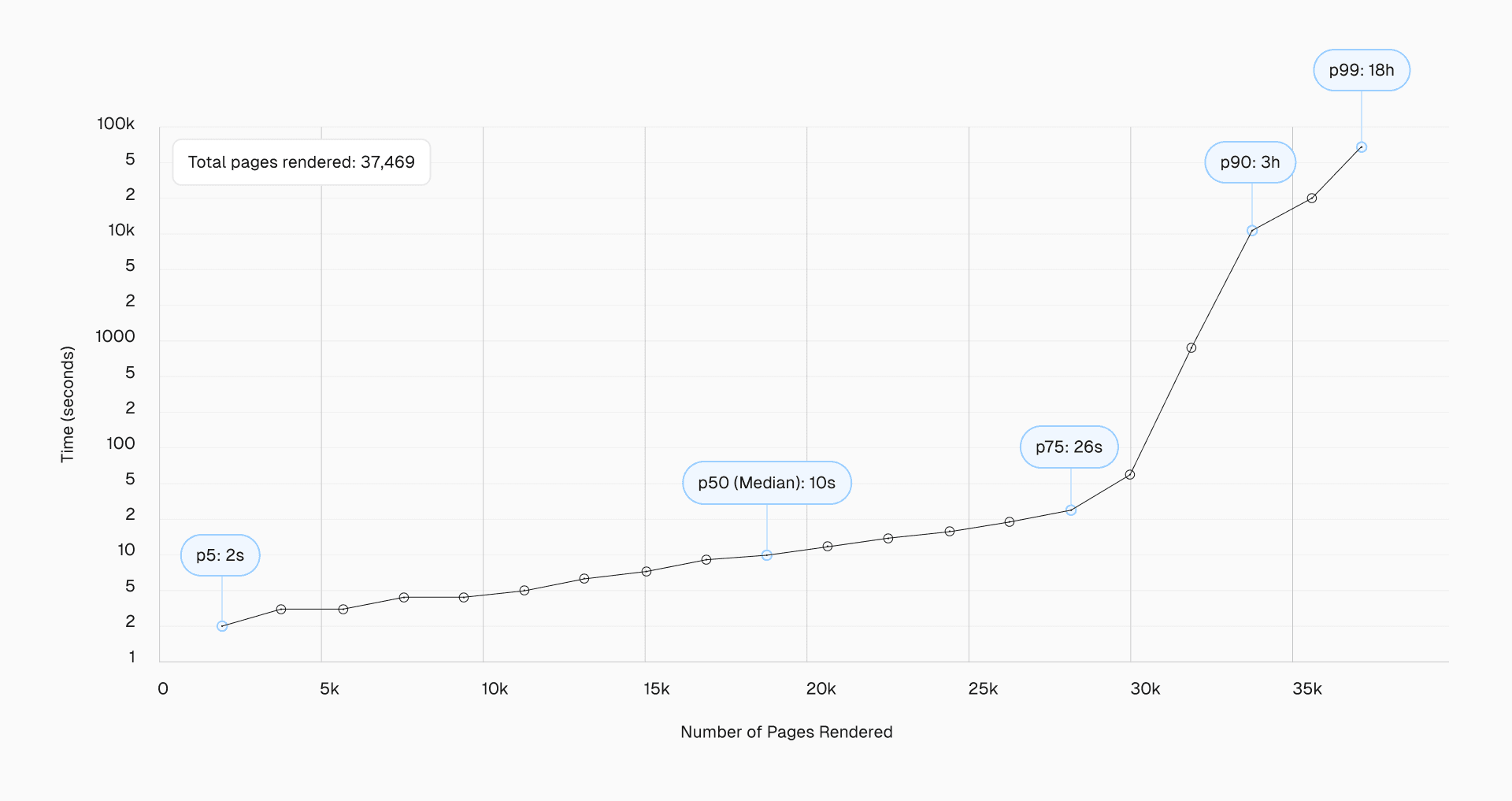

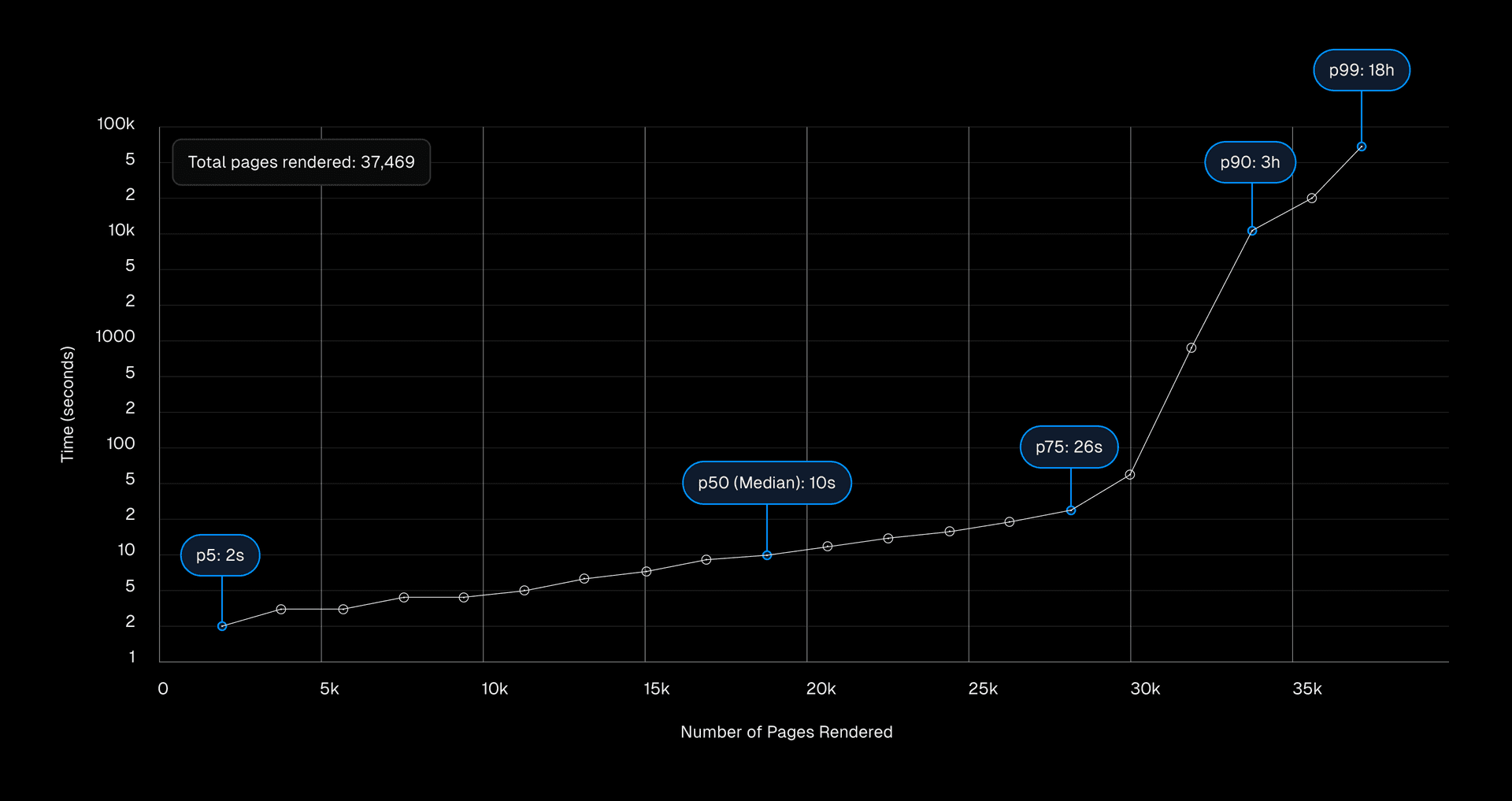

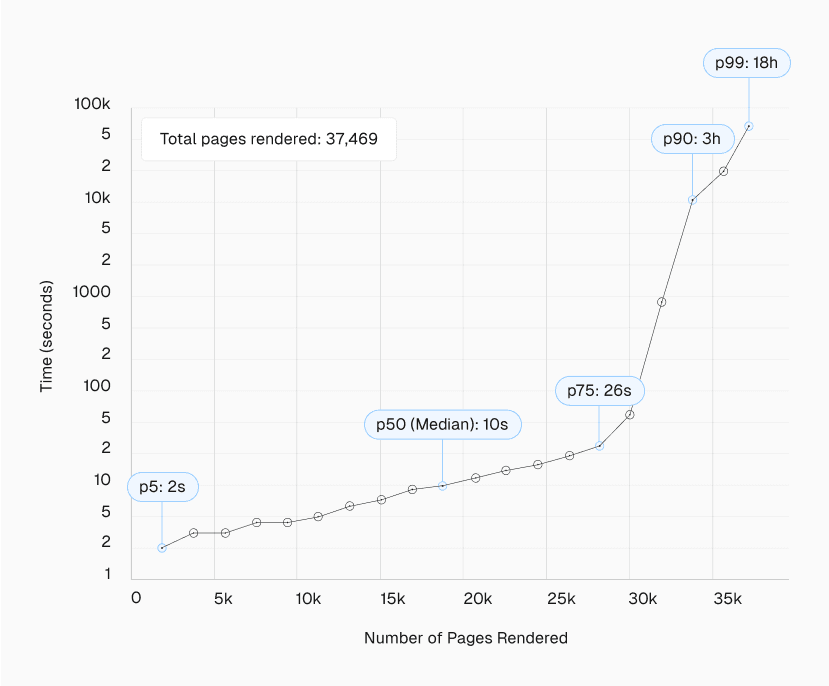

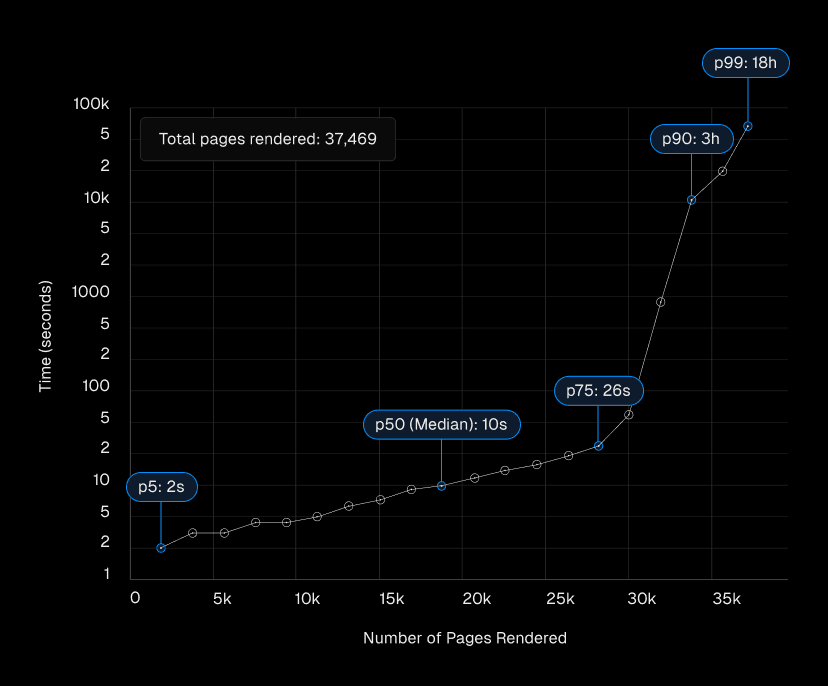

The rendering delay distribution was as follows:

50th percentile (median): 10 seconds

75th percentile: 26 seconds

90th percentile: ~3 hours

95th percentile: ~6 hours

99th percentile: ~18 hours

Surprisingly, 25% of pages (the 25th percentile) were rendered within 4 seconds of the initial crawl, challenging the notion of a long “queue.”

While some pages faced significant delays (up to ~18 hours at the 99th percentile), these were the exception and not the rule.
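Percentiles like those in the distribution above can be computed from the matched crawl-to-render delays. This sketch uses the nearest-rank method, which is an assumption: the study does not say which percentile definition it used, and interpolating methods give slightly different values on small samples.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// samples are less than or equal to it. `sorted` must be ascending.
function percentile(sorted: readonly number[], p: number): number {
  if (sorted.length === 0) throw new Error("no samples");
  if (p <= 0) return sorted[0];
  // Multiply before dividing so integer inputs avoid floating-point drift.
  const rank = Math.ceil((p * sorted.length) / 100); // 1-based rank
  return sorted[Math.min(rank, sorted.length) - 1];
}

// Summarize crawl-to-render delays (in ms) at the requested percentiles.
function delayDistribution(
  delaysMs: readonly number[],
  ps: readonly number[] = [25, 50, 75, 90, 95, 99],
): Record<number, number> {
  const sorted = [...delaysMs].sort((a, b) => a - b);
  const out: Record<number, number> = {};
  for (const p of ps) out[p] = percentile(sorted, p);
  return out;
}
```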

We also observed interesting patterns related to how quickly Google renders URLs with query strings (?param=xyz):

This data suggests that Google treats URLs differently if they have query strings that don't affect the content. For example, on nextjs.org, pages with ?ref= parameters experienced longer rendering delays, especially at higher percentiles.

Additionally, we noticed that frequently updated sections like /docs had shorter median rendering times compared to more static sections. For example, the /showcase page, despite being frequently linked, showed longer rendering times, suggesting that Google may slow down re-rendering for pages that don't change significantly.

Myth 4: “JavaScript-heavy sites have slower page discovery”

A persistent belief in the SEO community is that JavaScript-heavy sites, especially those relying on client-side rendering (CSR) like Single Page Applications (SPAs), suffer from slower page discovery by Google. Our research provides new insights here.

The test

To investigate the impact of JavaScript on page discovery, we:

Analyzed link discovery in different rendering scenarios: We compared how quickly Google discovered and crawled links in server-rendered, statically generated, and client-side rendered pages on nextjs.org.

Tested non-rendered JavaScript payloads: We added a JSON object similar to a React Server Component (RSC) payload to the /showcase page of nextjs.org, containing links to new, previously undiscovered pages. This allowed us to test if Google could discover links in JavaScript data that wasn’t rendered.

Compared discovery times: We monitored how quickly Google discovered and crawled new pages linked in different ways: standard HTML links, links in client-side rendered content, and links in non-rendered JavaScript payloads.

Our findings

Google successfully discovered and crawled links in fully rendered pages, regardless of rendering method.

Google can discover links in non-rendered JavaScript payloads on the page, such as those in React Server Components or similar structures.

In both initial and rendered HTML, Google processes content by identifying strings that look like URLs, using the current host and port as a base for relative URLs. (Google did not discover an encoded URL, i.e., https%3A%2F%2Fwebsite.com, in our RSC-like payload, suggesting its link parsing is very strict.)

The source and format of a link (e.g., in an <a> tag or embedded in a JSON payload) did not impact how Google prioritized its crawl. Crawl priority remained consistent regardless of whether a URL was found in the initial crawl or post-rendering.

While Google successfully discovers links in CSR pages, these pages do need to be rendered first. Server-rendered pages or partially pre-rendered pages have a slight advantage in immediate link discovery.

Google differentiates between link discovery and link value assessment. The evaluation of a link's value for site architecture and crawl prioritization occurs after full-page rendering.

Having an updated sitemap.xml significantly reduces, if not eliminates, the time-to-discovery differences between different rendering patterns.
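To make the strict link parsing described above concrete, here is an illustrative extractor that behaves the way the findings describe: it picks up quoted strings that look like absolute http(s) URLs or root-relative paths and resolves relative ones against the page's host and port, while a percent-encoded URL is never recognized. It is a model for reasoning about your own payloads, not Google's actual parser, and `extractLinks` is a hypothetical name.

```typescript
// Illustrative model of strict URL-like string detection; not Google's parser.
// Matches quoted absolute http(s) URLs and root-relative paths, then resolves
// relative paths against the page's origin (host and port).
function extractLinks(payload: string, pageUrl: string): string[] {
  const found = new Set<string>();
  const quoted = /"((?:https?:\/\/|\/)[^"\s\\]*)"/g;
  let m: RegExpExecArray | null;
  while ((m = quoted.exec(payload)) !== null) {
    const candidate = m[1];
    if (candidate.startsWith("//")) continue; // protocol-relative URLs skipped for simplicity
    found.add(new URL(candidate, pageUrl).href);
  }
  // Percent-encoded values such as "https%3A%2F%2Fwebsite.com" never match the pattern.
  return Array.from(found);
}
```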

Overall implications and recommendations

Our research has debunked several common myths about Google's handling of JavaScript-heavy websites. Here are the key takeaways and actionable recommendations:

Implications

JavaScript compatibility: Google can effectively render and index JavaScript content, including complex SPAs, dynamically loaded content, and streamed content.

Rendering parity: There's no fundamental difference in how Google processes JavaScript-heavy pages compared to static HTML pages. All pages are rendered.

Rendering queue reality: While a rendering queue exists, its impact is less significant than previously thought. Most pages are rendered within minutes, not days or weeks.

Page discovery: JavaScript-heavy sites, including SPAs, are not inherently disadvantaged in page discovery by Google.

Content timing: The point at which certain elements (like noindex tags) are added to the page is crucial, as Google may not process client-side changes.

Link value assessment: Google differentiates between link discovery and link value assessment. The latter occurs after full-page rendering.

Rendering prioritization: Google's rendering process isn't strictly first-in-first-out. Factors like content freshness and update frequency influence prioritization more than JavaScript complexity.

Rendering performance and crawl budget: While Google can effectively render JS-heavy pages, the process is more resource-intensive compared to static HTML, both for you and Google. For large sites (10,000+ unique and frequently changing pages), this can impact the site’s crawl budget. Optimizing application performance and minimizing unnecessary JS can help speed up the rendering process, improve crawl efficiency, and potentially allow more of your pages to be crawled, rendered, and indexed.

Recommendations

Embrace JavaScript: Leverage JavaScript frameworks freely for enhanced user and developer experiences, but prioritize performance and adhere to Google's best practices for lazy-loading.

Error handling: Implement error boundaries in React applications to prevent total render failures due to individual component errors.

Critical SEO elements: Use server-side rendering or static generation for critical SEO tags and important content to ensure they're present in the initial HTML response.

Resource management: Ensure critical resources for rendering (APIs, JavaScript files, CSS files) are not blocked by robots.txt.

Content updates: For content that needs to be quickly re-indexed, ensure changes are reflected in the server-rendered HTML, not just in client-side JavaScript. Consider strategies like Incremental Static Regeneration to balance content freshness with SEO and performance.

Internal linking and URL structure: Create a clear, logical internal linking structure. Implement important navigational links as real HTML anchor tags (<a href="...">) rather than JavaScript-based navigation. This approach aids both user navigation and search engine crawling efficiency while potentially reducing rendering delays.

Sitemaps: Use and regularly update sitemaps. For large sites or those with frequent updates, use the <lastmod> tag in XML sitemaps to guide Google's crawling and indexing processes. Remember to update the <lastmod> only when a significant content update occurs.

Monitoring: Use Google Search Console's URL Inspection Tool or the Rich Results Test to verify how Googlebot sees your pages. Monitor crawl stats to ensure your chosen rendering strategy isn't causing unexpected issues.
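One way to follow the <lastmod> advice in the Sitemaps recommendation is to fingerprint each page's meaningful content and bump the date only when that fingerprint changes. The `contentHash` field, how it is computed, and the function names are assumptions for illustration; the XML follows the sitemaps.org 0.9 schema.

```typescript
// Hypothetical page record: `contentHash` fingerprints the meaningful content
// (e.g. the article body), not volatile parts such as timestamps or ads.
interface Page { loc: string; contentHash: string }
interface SitemapEntry { loc: string; contentHash: string; lastmod: string } // W3C date, YYYY-MM-DD

function updateEntries(pages: Page[], previous: SitemapEntry[], today: string): SitemapEntry[] {
  const prev = new Map(previous.map((e) => [e.loc, e] as const));
  return pages.map((p) => {
    const old = prev.get(p.loc);
    // Keep the old <lastmod> unless the meaningful content actually changed.
    const lastmod = old && old.contentHash === p.contentHash ? old.lastmod : today;
    return { loc: p.loc, contentHash: p.contentHash, lastmod };
  });
}

function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function toSitemapXml(entries: SitemapEntry[]): string {
  const urls = entries
    .map((e) => `  <url>\n    <loc>${escapeXml(e.loc)}</loc>\n    <lastmod>${e.lastmod}</lastmod>\n  </url>`)
    .join("\n");
  return (
    `<?xml version="1.0" encoding="UTF-8"?>\n` +
    `<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n${urls}\n</urlset>\n`
  );
}
```

Pages whose fingerprint is unchanged keep their previous date, so <lastmod> stays a meaningful signal instead of changing on every build.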

Moving forward with new information

As we’ve explored, there are some differences between rendering strategies when it comes to Google’s abilities:

* Having an updated sitemap.xml significantly reduces, if not eliminates, the time-to-discovery differences between different rendering patterns.

** Rendering in Google usually doesn't fail, as our research showed; when it does, it's often due to blocked resources in robots.txt or specific edge cases.

These fine-grained differences exist, but Google will quickly discover and index your site regardless of rendering strategy. Rather than worrying about special accommodations for Google's rendering process, focus on creating performant web applications that benefit users.

After all, page speed is still a ranking factor, since Google’s page experience ranking system evaluates the performance of your site based on Google’s Core Web Vitals metrics.

Plus, page speed is linked to good user experience—with every 100ms of load time saved correlated to an 8% uptick in website conversion. Fewer users bouncing off your page means Google treats it as more relevant. Performance compounds; milliseconds matter.
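Since blocked resources in robots.txt are a common cause of the rare rendering failures noted above, it can help to check your asset paths against your robots.txt. The sketch below is simplified and its function names are hypothetical: it implements group selection (groups naming the crawler are combined, and they replace the `*` group rather than adding to it) and longest-match precedence with ties going to Allow, but not the `*` and `$` wildcards Google also supports. Treat it as a quick sanity check, not a replacement for Search Console.

```typescript
interface Rule { allow: boolean; path: string }
interface Group { agents: string[]; rules: Rule[] }

// Parse robots.txt into groups and return the rules that apply to `agent`.
// Groups naming the agent are combined; "*" is used only if none name it.
function rulesFor(robotsTxt: string, agent: string): Rule[] {
  const groups: Group[] = [];
  let current: Group | undefined;
  let lastWasAgent = false;
  for (const raw of robotsTxt.split(/\r?\n/)) {
    const line = raw.replace(/#.*/, "").trim(); // drop comments
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    const key = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (key === "user-agent") {
      // Consecutive user-agent lines share one group; otherwise start a new one.
      if (current === undefined || !lastWasAgent) {
        current = { agents: [], rules: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      lastWasAgent = true;
    } else if ((key === "allow" || key === "disallow") && current !== undefined) {
      // An empty Disallow value means "no rule".
      if (value !== "") current.rules.push({ allow: key === "allow", path: value });
      lastWasAgent = false;
    }
  }
  const target = agent.toLowerCase();
  const named = groups.filter((g) => g.agents.includes(target));
  const chosen = named.length > 0 ? named : groups.filter((g) => g.agents.includes("*"));
  return chosen.flatMap((g) => g.rules);
}

// Longest matching rule wins; on a tie, Allow wins; no matching rule means allowed.
// Simplification: wildcards (* and $) are not supported.
function isAllowed(robotsTxt: string, agent: string, path: string): boolean {
  let best: Rule | undefined;
  for (const rule of rulesFor(robotsTxt, agent)) {
    if (!path.startsWith(rule.path)) continue;
    if (
      best === undefined ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.allow)
    ) {
      best = rule;
    }
  }
  return best === undefined || best.allow;
}
```

For example, with `User-agent: *` disallowing `/api/` and a separate `User-agent: Googlebot` group disallowing `/_next/`, Googlebot is blocked from `/_next/` assets but not from `/api/`, because the named group replaces the `*` group.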

Further resources

To learn more about these topics, we recommend:

How Core Web Vitals affect your SEO: Provides a comprehensive overview of how Core Web Vitals (CWVs) affect SEO, explaining Google's page experience ranking system and the difference between field data (used for ranking) and lab data (Lighthouse scores).

How to choose the right rendering strategy: Guides developers in choosing optimal rendering strategies for web applications, explaining various methods like SSG, ISR, SSR, and CSR, their use cases, and implementation considerations using Next.js.

The user experience of the Frontend Cloud: Explains how Vercel's Frontend Cloud enables fast, personalized web experiences by combining advanced caching strategies, Edge Network capabilities, and flexible rendering options to optimize both user experience and developer productivity.


About MERJ

MERJ is a leading SEO and data engineering consultancy specializing in technical SEO and performance optimization for complex web applications.

With a track record of success across various industries, MERJ brings cutting-edge expertise to help businesses navigate the ever-evolving landscape of search engine optimization.

If you need assistance with any of the SEO topics raised in this research, or if you're looking to optimize your web application for better search visibility and performance, don't hesitate to contact MERJ.