
The Model Context Protocol (MCP) standardizes how to build integrations for AI models. We built the MCP adapter to help developers create their own MCP servers using popular frameworks such as Next.js, Nuxt, and SvelteKit. Production apps like Zapier, Composio, Vapi, and Solana use the MCP adapter to deploy their own MCP servers on Vercel, and they've seen substantial growth in the past month.

MCP has been adopted by popular clients like Cursor, Claude, and Windsurf, which can now connect to MCP servers and call their tools. Companies build their own MCP servers to make their tools available across this ecosystem.

The growing adoption of MCP shows its importance, but scaling MCP servers reveals limitations in the original design. Let's look at how the MCP specification has evolved, and how the MCP adapter can help.

What's changed in MCP

When the first version of the MCP specification was published in November 2024, it defined two transports between clients and servers: standard input/output (stdio) and Server-Sent Events (SSE). Stdio required running the MCP server locally, while SSE allowed servers to be hosted and accessed remotely.

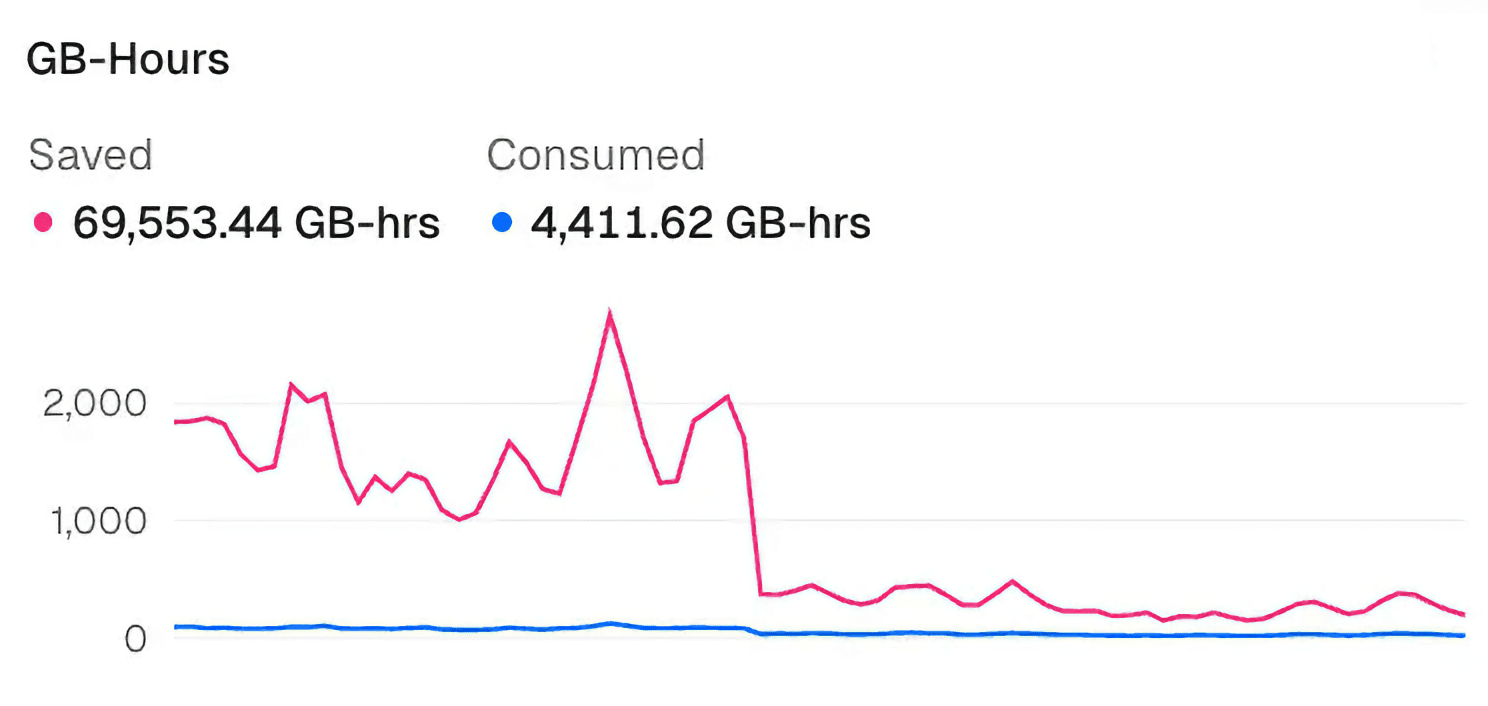

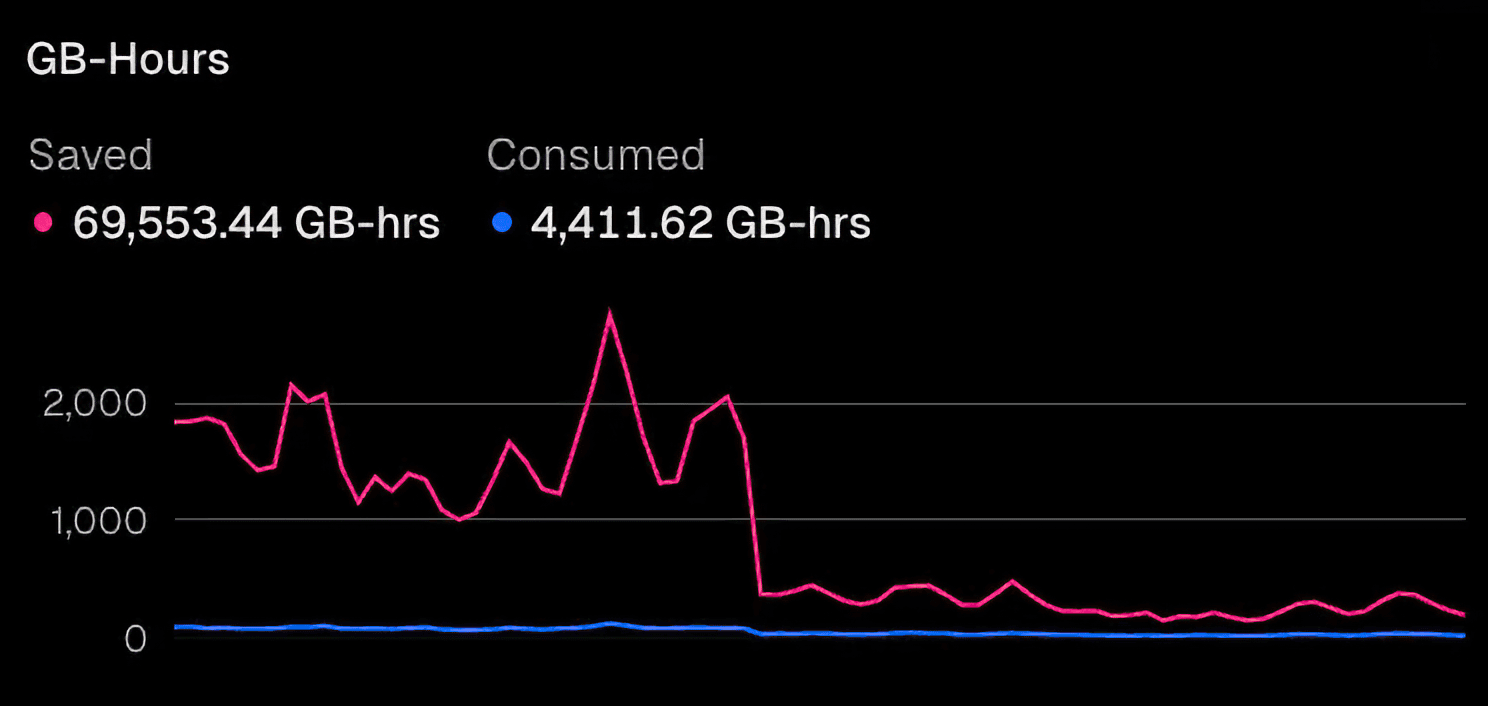
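Under the stdio transport, the client launches the server as a subprocess and the two exchange JSON-RPC 2.0 messages over stdin and stdout, one message per line; the specification forbids embedded newlines inside a message. The sketch below illustrates that framing. `encodeMessage` and `splitMessages` are hypothetical helpers for illustration, not functions from an MCP SDK.

```typescript
// Sketch of stdio framing: newline-delimited JSON-RPC 2.0 messages.
// Helper names are hypothetical, not part of any MCP SDK.

type JsonRpcMessage = {
  jsonrpc: "2.0";
  id?: number | string;
  method?: string;
  params?: unknown;
  result?: unknown;
};

// One message per line. JSON.stringify without an indent argument never
// emits raw newlines (newlines inside strings are escaped as \n).
function encodeMessage(msg: JsonRpcMessage): string {
  return JSON.stringify(msg) + "\n";
}

// Split buffered stdout into complete messages, returning any trailing
// partial line so the caller can prepend it to the next chunk.
function splitMessages(buffer: string): { messages: JsonRpcMessage[]; rest: string } {
  const lines = buffer.split("\n");
  const rest = lines.pop() ?? "";
  const messages = lines
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line) as JsonRpcMessage);
  return { messages, rest };
}

// Two complete messages followed by the start of a third.
const wire =
  encodeMessage({ jsonrpc: "2.0", id: 1, method: "tools/list" }) +
  encodeMessage({
    jsonrpc: "2.0",
    id: 2,
    method: "tools/call",
    params: { name: "roll_dice", arguments: { sides: 6 } },
  }) +
  '{"jsonrpc":"2.0","id":3';

const { messages, rest } = splitMessages(wire);
console.log(messages.map((m) => m.method)); // [ 'tools/list', 'tools/call' ]
console.log(rest); // {"jsonrpc":"2.0","id":3
```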

However, SSE is an inefficient transport. It keeps a persistent connection open between client and server, even when idle. While Vercel's Fluid compute reduces overhead by reusing already allocated servers, SSE remains an unsustainable choice for MCP servers at scale.
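To see why the connection stays open, consider the original HTTP+SSE flow: the client opens a long-lived GET request to the server's SSE endpoint, the server's first event is an `endpoint` event naming the URL where the client should POST its messages, and the server's replies then arrive as events on that same open stream. The following is a simplified sketch of reading those frames. `parseSseFrames` is a hypothetical helper, the `/message?sessionId=abc123` path is made up, and the parser ignores parts of the SSE format such as `id:`, `retry:`, and comment lines.

```typescript
// Simplified reader for the text/event-stream body used by the original
// HTTP+SSE transport. Frames are separated by a blank line; this sketch only
// understands "event:" and "data:" fields.

type SseEvent = { event: string; data: string };

function parseSseFrames(text: string): SseEvent[] {
  return text
    .split("\n\n")
    .filter((frame) => frame.trim() !== "")
    .map((frame) => {
      let event = "message"; // default event type when "event:" is absent
      const data: string[] = [];
      for (const line of frame.split("\n")) {
        // A single space after the colon is not part of the field value.
        if (line.startsWith("event:")) event = line.slice(6).replace(/^ /, "");
        else if (line.startsWith("data:")) data.push(line.slice(5).replace(/^ /, ""));
      }
      // Multiple data lines in one frame are joined with newlines.
      return { event, data: data.join("\n") };
    });
}

// What a client might receive right after opening the SSE stream,
// followed later by the reply to one of its POSTed requests.
const stream =
  "event: endpoint\ndata: /message?sessionId=abc123\n\n" +
  'event: message\ndata: {"jsonrpc":"2.0","id":1,"result":{"tools":[]}}\n\n';

const events = parseSseFrames(stream);
const endpoint = events.find((e) => e.event === "endpoint")?.data;
console.log(endpoint); // /message?sessionId=abc123
```

The stream carrying these events must stay open for the whole session, because it is the only path for server replies.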

A new MCP server transport: Streamable HTTP

In March 2025, a new version of the MCP specification introduced Streamable HTTP as the recommended transport, replacing SSE. This change improves efficiency by removing the need for persistent client-server connections.
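With Streamable HTTP, the server exposes a single MCP endpoint and the client sends each JSON-RPC message as its own HTTP POST, listing both `application/json` and `text/event-stream` in its `Accept` header so the server may reply with either a single JSON body or a short-lived stream. If the server assigned a session during initialization, the client echoes it back in the `Mcp-Session-Id` header. Here is a sketch of building such a request with the standard Fetch API `Request` class (global in Node.js 18+); `buildToolCall`, the URL, and the session ID are illustrative assumptions.

```typescript
// Sketch of a Streamable HTTP call: one POST per JSON-RPC message to a
// single MCP endpoint. Helper name, URL, and session ID are placeholders.

function buildToolCall(
  endpoint: string,
  id: number,
  name: string,
  args: Record<string, unknown>,
  sessionId?: string,
): Request {
  const headers: Record<string, string> = {
    "Content-Type": "application/json",
    // The client must accept both a plain JSON reply and an SSE stream.
    Accept: "application/json, text/event-stream",
  };
  // Only sent when the server assigned a session during initialization.
  if (sessionId) headers["Mcp-Session-Id"] = sessionId;

  return new Request(endpoint, {
    method: "POST",
    headers,
    body: JSON.stringify({
      jsonrpc: "2.0",
      id,
      method: "tools/call",
      params: { name, arguments: args },
    }),
  });
}

const req = buildToolCall("https://example.com/api/mcp", 1, "roll_dice", { sides: 6 });
console.log(req.method, req.headers.get("accept")); // POST application/json, text/event-stream
```

Sending it is an ordinary `fetch(req)`; unlike the SSE transport, nothing has to stay connected between calls unless the server chooses to stream a particular reply.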

Existing investments and lack of resources have slowed the adoption of Streamable HTTP by both MCP client developers and MCP server developers. As a result, many existing MCP clients continue to support only stdio and SSE.

Server developers building with the Vercel MCP adapter get built-in support for both Streamable HTTP and SSE. They can also disable SSE entirely to align with the officially supported, more efficient transport.

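```typescript
import { createMcpHandler } from '@vercel/mcp-adapter';
import { z } from 'zod'; // zod schemas describe and validate each tool's input

const handler = createMcpHandler((server) => {
  // Register one tool: name, description, input schema, and handler.
  server.tool(
    'roll_dice',
    'Rolls an N-sided die',
    { sides: z.number().int().min(2) },
    async ({ sides }) => {
      const value = 1 + Math.floor(Math.random() * sides);
      return { content: [{ type: 'text', text: `🎲 You rolled a ${value}!` }] };
    },
  );
});

// Streamable HTTP uses POST for client messages, GET for an optional
// server-to-client stream, and DELETE to end a session.
export { handler as GET, handler as POST, handler as DELETE };
```

An example MCP server with a single tool.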
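Because the tool callback is an ordinary async function, its logic can be tested without running an MCP client. One approach, sketched here under the assumption that you factor the dice logic into a hypothetical `rollDice` helper with an injectable random source, makes the result deterministic in tests:

```typescript
// Hypothetical helper extracted from the roll_dice tool so it can be tested
// deterministically. `random` defaults to Math.random.
type ToolResult = { content: { type: "text"; text: string }[] };

function rollDice(sides: number, random: () => number = Math.random): ToolResult {
  // Duplicates the zod schema's check so the helper is safe to call directly.
  if (!Number.isInteger(sides) || sides < 2) {
    throw new RangeError("sides must be an integer >= 2");
  }
  // random() is in [0, 1), so floor(random() * sides) is in [0, sides - 1].
  const value = 1 + Math.floor(random() * sides);
  return { content: [{ type: "text", text: `🎲 You rolled a ${value}!` }] };
}

console.log(rollDice(6, () => 0).content[0].text); // 🎲 You rolled a 1!
console.log(rollDice(6, () => 0.9999).content[0].text); // 🎲 You rolled a 6!
```

Inside `createMcpHandler`, the tool callback would then reduce to `async ({ sides }) => rollDice(sides)`.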

Optimizing MCP servers for efficiency

Streamable HTTP, with its standard HTTP transport and support for both stateless and stateful server models, is the clear path forward for remote-hosted MCP servers.
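In the stateless model, each POST carries everything needed to answer it, so any instance can serve any request and nothing is kept in memory between calls; the stateful model instead issues an `Mcp-Session-Id` at initialization and ties later requests to it. The sketch below shows the stateless shape using only Web-standard `Request` and `Response`, with no MCP SDK. It is deliberately incomplete (no `initialize` handshake, batching, or streamed replies), and `dispatch` and `handlePost` are hypothetical names; a library such as the MCP adapter takes care of these details in practice.

```typescript
// Sketch of a stateless Streamable HTTP endpoint: no sessions and no
// in-memory state, so every request is answered from its own contents.

type JsonRpcRequest = { jsonrpc: "2.0"; id?: number | string; method: string; params?: any };
type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: number | string; result: unknown }
  | { jsonrpc: "2.0"; id: number | string; error: { code: number; message: string } };

function dispatch(msg: JsonRpcRequest & { id: number | string }): JsonRpcResponse {
  switch (msg.method) {
    case "tools/list":
      return {
        jsonrpc: "2.0",
        id: msg.id,
        result: {
          tools: [{
            name: "roll_dice",
            description: "Rolls an N-sided die",
            inputSchema: {
              type: "object",
              properties: { sides: { type: "integer", minimum: 2 } },
              required: ["sides"],
            },
          }],
        },
      };
    case "tools/call": {
      const sides = msg.params?.arguments?.sides;
      if (msg.params?.name !== "roll_dice" || !Number.isInteger(sides) || sides < 2) {
        return { jsonrpc: "2.0", id: msg.id, error: { code: -32602, message: "Invalid params" } };
      }
      const value = 1 + Math.floor(Math.random() * sides);
      return { jsonrpc: "2.0", id: msg.id, result: { content: [{ type: "text", text: `🎲 You rolled a ${value}!` }] } };
    }
    default:
      return { jsonrpc: "2.0", id: msg.id, error: { code: -32601, message: "Method not found" } };
  }
}

async function handlePost(req: Request): Promise<Response> {
  const msg = (await req.json()) as JsonRpcRequest;
  // Notifications carry no id and expect no JSON-RPC reply: 202 Accepted.
  if (msg.id === undefined) return new Response(null, { status: 202 });
  const body = JSON.stringify(dispatch({ ...msg, id: msg.id }));
  return new Response(body, { headers: { "Content-Type": "application/json" } });
}
```

Because `handlePost` reads nothing but the incoming request, it can scale out across instances without sticky sessions or a shared store.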

To support clients that do not yet handle Streamable HTTP, the mcp-remote package can run locally as a stdio server and proxy each request to a remote Streamable HTTP server. With a small server setup change, like the approach used by Solana, developers can benefit from Streamable HTTP's efficiency without waiting for full client support.
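As a rough illustration, a stdio-only client can reach a remote server by launching `mcp-remote` through `npx` as a local subprocess. The `mcpServers` / `command` / `args` shape below is the one used by clients such as Claude Desktop and Cursor; the server name `my-vercel-mcp` and the URL are placeholders, and `mcpRemoteEntry` is a hypothetical helper that only prints the JSON to paste into the client's config file.

```typescript
// Sketch: generate a stdio client config entry that launches mcp-remote as
// a local proxy. Server name and URL are placeholders.

type StdioServerEntry = { command: string; args: string[] };

function mcpRemoteEntry(serverUrl: string): StdioServerEntry {
  // The client spawns `npx mcp-remote <url>` and talks to it over stdio;
  // mcp-remote relays those messages to the remote server over HTTP.
  return { command: "npx", args: ["mcp-remote", serverUrl] };
}

const config = {
  mcpServers: {
    "my-vercel-mcp": mcpRemoteEntry("https://my-app.vercel.app/api/mcp"),
  },
};

console.log(JSON.stringify(config, null, 2));
```

Once the client speaks Streamable HTTP natively, the entry can point at the URL directly and the proxy drops out.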

This approach provides forward compatibility while client support continues to evolve. In most cases, mcp-remote will no longer be needed once native support for Streamable HTTP is more widely adopted.

One MCP server deployed on Vercel switched entirely to Streamable HTTP and cut its CPU usage in half, even as its user base continued to grow.

Building for the future

The MCP ecosystem is evolving quickly. With Fluid compute and the MCP adapter, you can ship MCP servers that support both current and future clients.

We are committed to supporting every team adopting MCP. Explore the MCP adapter and try the Next.js MCP template to build your own MCP server.
